Models

IOPaint supports many models out of the box. These models fall into three groups.

Erase Models

The following models can be used to remove unwanted objects, defects, watermarks, or people.

- 🌟 LaMa: Recommended

- 🌟 MAT: Recommended

- 🌟 MIGAN: Recommended. The smallest of these models, requiring the fewest resources.

- LDM

- ZITS

- FcF

- Manga

Stable Diffusion Models

You can use any Stable Diffusion inpainting (or normal) model from Hugging Face in IOPaint.

To use a Stable Diffusion model, add --model followed by the model name when launching IOPaint, for example --model runwayml/stable-diffusion-inpainting.
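As a rough sketch of the launch step, the snippet below assembles the command line in Python. Only the --model flag comes from the text above. The iopaint start subcommand and the --device and --port options are assumptions about the installed CLI, and build_launch_command is a hypothetical helper, so check the result against the CLI's own help output before relying on it.

```python
import shlex


def build_launch_command(model: str, device: str = "cpu", port: int = 8080) -> list[str]:
    """Assemble an argument list for launching IOPaint with the given model.

    The `start` subcommand, `--device`, and `--port` are assumptions;
    only `--model` is taken from the documentation text.
    """
    return [
        "iopaint", "start",
        "--model", model,
        "--device", device,
        "--port", str(port),
    ]


command = build_launch_command("runwayml/stable-diffusion-inpainting", device="cuda")

# Print the command as it would be typed in a shell.
print(shlex.join(command))

# To actually start the server, pass the list to subprocess.run(command, check=True).
```

Building the arguments as a list rather than a single string avoids shell-quoting problems if a model name or path contains spaces.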

Popular models include:

- runwayml/stable-diffusion-inpainting

- diffusers/stable-diffusion-xl-1.0-inpainting-0.1

- diffusionbee/fooocus_inpainting

- andregn/Realistic_Vision_V3.0-inpainting

- Lykon/dreamshaper-8-inpainting

- Sanster/anything-4.0-inpainting

Load ckpt/safetensors

You can load single-file (ckpt/safetensors) Stable Diffusion models in IOPaint.

Create two folders in the model_dir directory, stable_diffusion and stable_diffusion_xl, and place each model file in the folder that matches its model family.
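The folder setup can be sketched in a few lines of Python. The helper name create_model_folders is hypothetical, and the demo runs in a throwaway temporary directory; in practice you would pass the path of your real model_dir.

```python
import tempfile
from pathlib import Path

# Folder names taken from the documentation text.
SUBFOLDERS = ("stable_diffusion", "stable_diffusion_xl")


def create_model_folders(model_dir: Path) -> list[Path]:
    """Create the two single-file model folders under model_dir if missing."""
    folders = [model_dir / name for name in SUBFOLDERS]
    for folder in folders:
        folder.mkdir(parents=True, exist_ok=True)
    return folders


# Demo in a temporary directory; replace with your actual model_dir path.
with tempfile.TemporaryDirectory() as tmp:
    created = create_model_folders(Path(tmp) / "model_dir")
    created_names = sorted(p.name for p in created if p.is_dir())

print(created_names)
```

Because mkdir is called with exist_ok=True, running the helper again on an existing model_dir leaves any model files already in place untouched.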

    model_dir
    ├── stable_diffusion
    │   ├── sd-v1-5-inpainting.ckpt
    │   ├── sd-v1-5-inpainting.safetensors
    │   └── v1-5-pruned-emaonly.safetensors
    └── stable_diffusion_xl
        ├── sd_xl_base_1.0.safetensors
        └── sd_xl_base_1.0_inpainting_0.1.safetensors

Load local diffusers model

You can use the convert_original_stable_diffusion_to_diffusers.py script to convert a single-file ckpt/safetensors model into diffusers format, then put the converted model directory into the model_dir/stable_diffusion or model_dir/stable_diffusion_xl directory.
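To check which entries in one of these folders are converted models rather than single files, one option is to look for a model_index.json file, which a saved diffusers pipeline directory contains at its top level. This is only an illustrative check and not necessarily how IOPaint scans model_dir; find_diffusers_models is a hypothetical helper, and the demo builds a fake layout in a temporary directory.

```python
import tempfile
from pathlib import Path


def find_diffusers_models(family_dir: Path) -> list[str]:
    """Return names of subdirectories that contain a model_index.json file."""
    return sorted(
        child.name
        for child in family_dir.iterdir()
        if child.is_dir() and (child / "model_index.json").is_file()
    )


# Demo: one converted directory next to a single-file checkpoint.
with tempfile.TemporaryDirectory() as tmp:
    sdxl_dir = Path(tmp) / "stable_diffusion_xl"
    (sdxl_dir / "converted_sdxl").mkdir(parents=True)
    (sdxl_dir / "converted_sdxl" / "model_index.json").write_text("{}")
    (sdxl_dir / "sd_xl_base_1.0.safetensors").touch()
    found = find_diffusers_models(sdxl_dir)

print(found)
```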

Converting ahead of time removes the conversion step that otherwise runs when a single-file model is loaded, and it also saves memory during service startup.

The drawback is that the converted model directory may occupy more disk space. Here is an example directory structure:

    model_dir
    └── stable_diffusion_xl
        ├── converted_sdxl
        │   ├── model_index.json
        │   ├── scheduler
        │   ├── text_encoder
        │   ├── text_encoder_2
        │   ├── tokenizer
        │   ├── tokenizer_2
        │   ├── unet
        │   └── vae
        ├── sd_xl_base_1.0.safetensors
        └── sd_xl_base_1.0_inpainting_0.1.safetensors

Cropper

You can use Cropper to perform inpainting operations on only a specific area.
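Conceptually, restricting inpainting to one area amounts to cropping the region, processing only the crop, and pasting the result back. The sketch below illustrates that idea on a tiny grid of pixel values; it is not IOPaint's implementation, and inpaint_region and fill_with_zero are hypothetical names, with fill_with_zero standing in for a real model.

```python
def inpaint_region(image, box, inpaint_fn):
    """Apply inpaint_fn to the crop given by box and paste it back.

    image is a list of rows; box is (left, top, right, bottom) with
    right and bottom exclusive. The input image is not modified.
    """
    left, top, right, bottom = box
    crop = [row[left:right] for row in image[top:bottom]]
    patched = inpaint_fn(crop)
    output = [row[:] for row in image]  # copy so the original stays intact
    for dy, patched_row in enumerate(patched):
        output[top + dy][left:right] = patched_row
    return output


def fill_with_zero(crop):
    """Hypothetical stand-in for a real inpainting model."""
    return [[0 for _ in row] for row in crop]


image = [[9] * 4 for _ in range(4)]
result = inpaint_region(image, (1, 1, 3, 3), fill_with_zero)
for row in result:
    print(row)
```

Only the pixels inside the box change, which is why working on a crop can be cheaper than processing the full image.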

Expander

You can use Expander to enlarge the image (outpainting).

Outpainting using PowerPaint

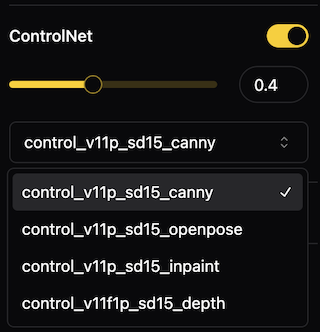

ControlNet

You can enable ControlNet in the web interface and select which ControlNet model to use.

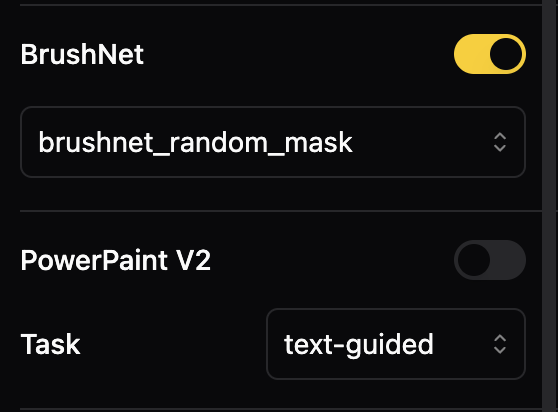

PowerPaintV2 and BrushNet

PowerPaintV2 and BrushNet can turn any SD 1.5 model into an inpainting model.

LCM LoRA

LCM LoRA enables quality image generation in typically 2-8 steps. Disabling guidance by setting guidance_scale to 0 is suggested; you can also try values between 1.0 and 2.0. When LCM LoRA is enabled, LCMSampler is used automatically.

Mask Blur

Blurs the mask before processing so that the generated inpainting boundaries appear more natural.
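To show why blurring helps, the sketch below softens a hard-edged mask with a simple box filter, turning the abrupt 0-to-255 step into a gradual ramp. The box filter is an assumption chosen for brevity; the kernel IOPaint actually applies may differ, and box_blur_mask is a hypothetical helper.

```python
def box_blur_mask(mask, radius=1):
    """Blur a 2D mask (rows of 0-255 ints) with a (2*radius+1)^2 box filter.

    Pixels near the border average over the neighbours that exist.
    """
    height, width = len(mask), len(mask[0])
    blurred = []
    for y in range(height):
        row = []
        for x in range(width):
            total = count = 0
            for ny in range(max(0, y - radius), min(height, y + radius + 1)):
                for nx in range(max(0, x - radius), min(width, x + radius + 1)):
                    total += mask[ny][nx]
                    count += 1
            row.append(round(total / count))
        blurred.append(row)
    return blurred


# A mask with a hard vertical edge: left half kept, right half inpainted.
hard_mask = [[0, 0, 0, 255, 255, 255] for _ in range(3)]
soft_mask = box_blur_mask(hard_mask, radius=1)
print(soft_mask[1])
```

The intermediate values along the edge let the generated area blend with the untouched pixels instead of meeting them at a sharp seam.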

Match Histograms

Matches the histogram of the inpainting result to the histogram of the source image, which can make the generated result look more natural.
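Histogram matching is commonly done by aligning cumulative distributions: each intensity in the result is remapped to the source intensity at the same cumulative rank. The sketch below shows that classic approach for a single channel stored as a flat list of 0-255 values. It is an assumption that IOPaint follows the same scheme, and the function names are hypothetical.

```python
from bisect import bisect_left
from itertools import accumulate


def cumulative_distribution(values, levels=256):
    """Return the cumulative distribution of integer intensities in values."""
    counts = [0] * levels
    for value in values:
        counts[value] += 1
    total = len(values)
    return [running / total for running in accumulate(counts)]


def match_histogram(result, source, levels=256):
    """Remap result so its intensity distribution follows that of source."""
    result_cdf = cumulative_distribution(result, levels)
    source_cdf = cumulative_distribution(source, levels)
    # For each level, take the first source level whose CDF reaches the
    # result's CDF at that level.
    lookup = [
        min(bisect_left(source_cdf, result_cdf[level]), levels - 1)
        for level in range(levels)
    ]
    return [lookup[value] for value in result]


dark_result = [10, 10, 20, 30]
bright_source = [100, 100, 150, 200]
matched = match_histogram(dark_result, bright_source)
print(matched)
```

For a colour image the same remapping would be applied to each channel separately.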

Other Diffusion Models

- PowerPaint: Using the concept of "learnable task prompts", PowerPaint can handle specific image editing tasks more effectively. These tasks include text-guided inpainting, shape-guided inpainting, object removal, and outpainting.

- AnyText: Generate text on images that aligns with the style of the image and the prompt.

- Paint by Example: Use an example image to guide the model to generate similar content on the image.

- InstructPix2Pix: Use a text instruction to edit the picture.

- Kandinsky: Kandinsky inherits best practices from DALL-E 2 and latent diffusion while introducing some new ideas.